The Petrovsky dealership network is the largest official GAC dealer in the Russian Federation.

Artificially inflating likes and views on YouTube is a controversial but common way to promote a channel.

Japanese car auctions offer a wide range of vehicles, from economical city cars to luxury sports

Sheregesh, in Russia's Kemerovo Oblast, is known as one of the best ski resorts in the country.

Selling a car can be both a lengthy and a draining process, especially

When choosing an intercity taxi, it is important to consider several key factors, such as the carrier's reliability, comfort

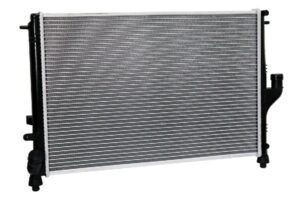

The radiator is part of a car's cooling system and carries excess heat away from the engine.

In the Lada Largus, the radiator cools the coolant that circulates through the engine.

Autogas filling stations (AGZS) are a key element of modern fuel infrastructure, providing refueling for vehicles

Many car owners consider improving their vehicle's performance and making their trips more comfortable. To accomplish

Quad bikes, known for their power and versatility, have become a popular means of transport for adventure seekers and

Proper tire fitting ensures that the tires perform effectively, providing optimal grip on the road and

Odometer tampering is a common problem in the used-car market, so checking the mileage

The procedure for getting a driver's license back after it has been revoked depends on the laws of the specific country or region.

First of all, it is important to understand that a claim is a formal letter setting out the reasons

Motorcycles are popular vehicles that offer freedom of movement and an adrenaline rush on the road.

Minibus manufacturing is the process of building new vehicles designed to carry a small number of

Determine your needs: before you start searching, work out what requirements and preferences you have

At the scene of a road traffic accident, providing aid is critically important.

The Exeed VX is a mid-size crossover that combines elegant design and

A catalytic converter is a device installed in a car's exhaust system to reduce emissions of harmful

The ideal supplier should offer fresh, beautiful flowers so that your bouquet looks attractive and

The Foton tractor unit is a commercial truck designed for hauling heavy loads

Car rental is a service offered by rental companies and consists of

Japanese tires are known for their high reliability, durability, good road grip, and safety when

A truck axle repair machine makes adjusting and repairing vehicle

Headlight polishing removes surface defects from a car's headlights, which makes it possible

Checking a car against the GIBDD (traffic police) database can help you make sure that the car is not

Non-studded winter tires are special tires designed for winter conditions,

A ranking of quality bottle packaging: tube cases and boxes